Chiranjeevi Maddala

February 14, 2026

AI data privacy for students is a comprehensive set of security protocols, regulatory frameworks, and technological safeguards designed to protect children’s personal information when they interact with artificial intelligence systems in educational settings. As schools integrate advanced learning technologies, understanding these privacy measures has become essential for administrators, teachers, and parents alike.

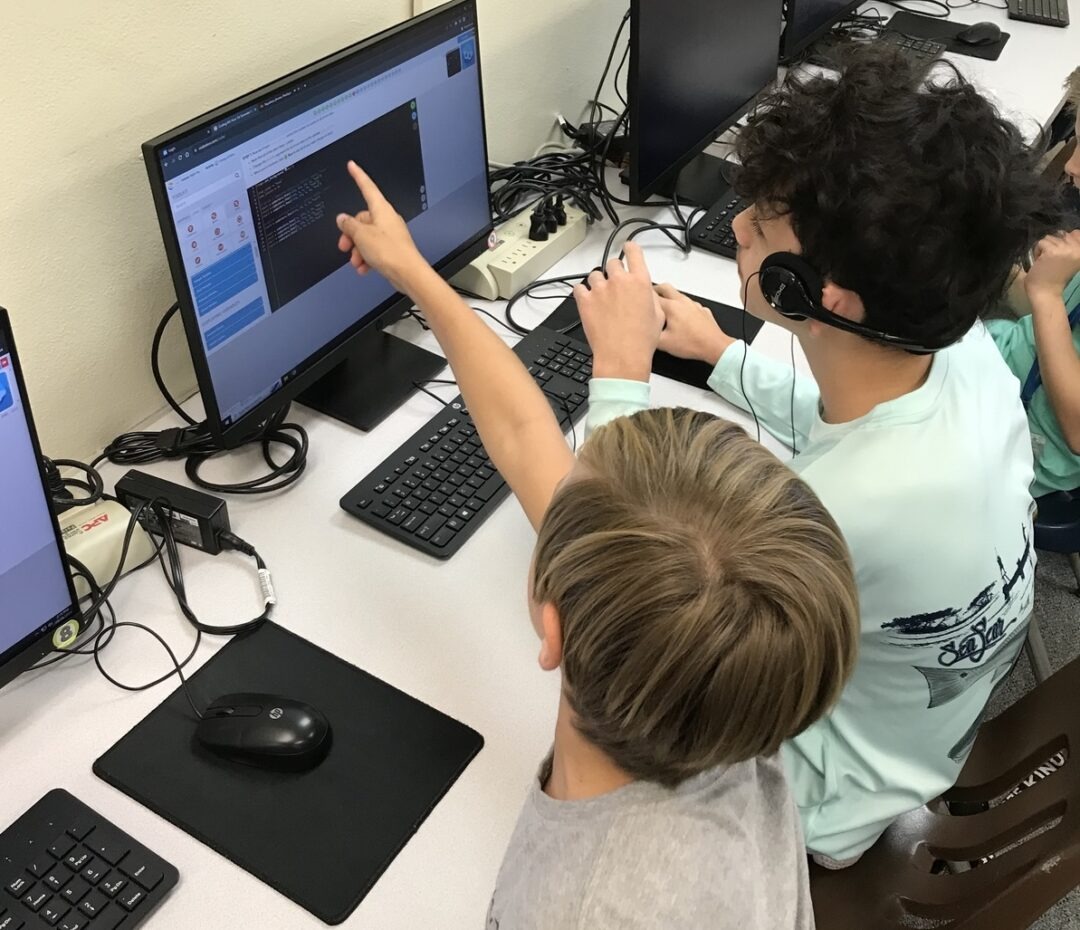

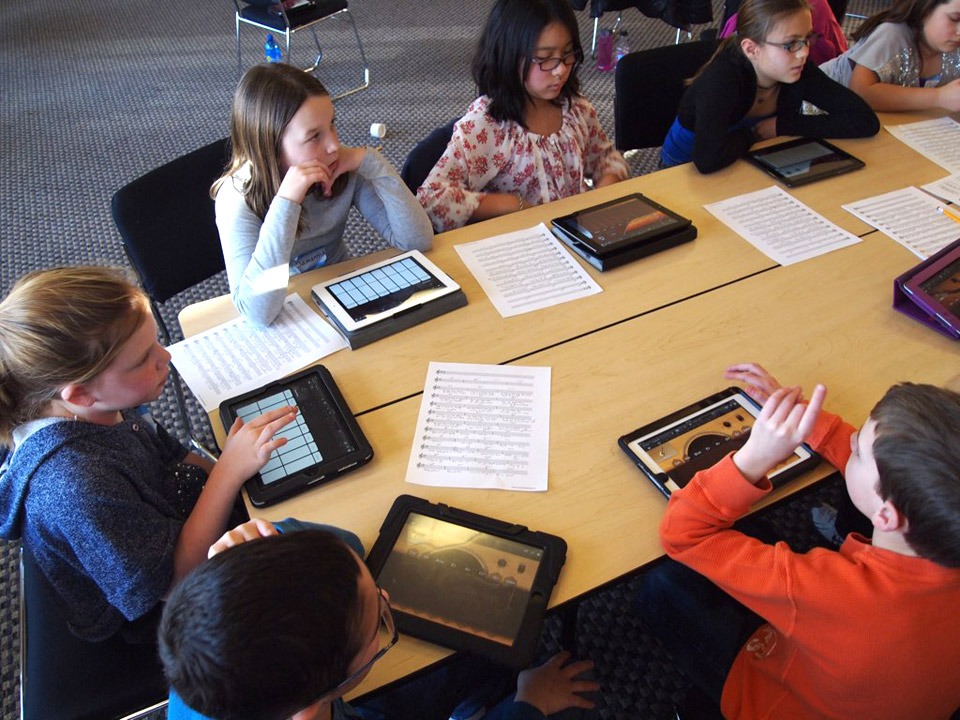

The rapid adoption of educational technology has transformed how students learn, but it has also created new vulnerabilities. When implementing a Personal AI Learning Companion for kids, institutions must prioritise data protection from day one.

These AI systems collect vast amounts of information—from learning patterns and assessment scores to behavioural data and personal preferences—making robust privacy frameworks non-negotiable.

Student anonymity remains paramount in any secure learning environment. Modern AI platforms should encrypt student data so that it is unreadable to unauthorised parties. This technical safeguard works alongside legal protections to create multiple layers of security.

Educational institutions deploying AI for schools must navigate two critical regulatory frameworks:

When evaluating AI educational platforms, schools should verify the following critical privacy and security capabilities:

| Feature | Traditional EdTech | Privacy-First AI |
| --- | --- | --- |
| Data Collection | Extensive tracking of all activities | Minimal, purpose-limited data collection |
| Encryption Standards | Often basic or transport-layer only | End-to-end encryption with zero-knowledge architecture |
| Third-Party Sharing | Frequently shared with advertisers or partners | Strict no-sharing policies with contractual protections |
| Student Anonymity | Names and IDs directly linked to profiles | Pseudonymisation and anonymisation techniques |
| COPPA Compliance | Inconsistent or minimal adherence | Built-in consent workflows and age verification |
| Data Retention | Indefinite storage is common | Automatic deletion after the educational purpose is fulfilled |
| Parent Controls | Limited visibility and control | Full transparency with access and deletion rights |
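
To make the COPPA Compliance and Data Retention comparisons above more concrete, here is a minimal Python sketch of a consent gate and a retention purge. It is an illustration under stated assumptions, not any vendor's implementation: `StudentRecord`, `may_collect`, `is_due_for_deletion`, `purge`, and the 30-day grace period are all hypothetical. The only external fact it relies on is that COPPA applies to children under 13.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional

COPPA_AGE_THRESHOLD = 13  # COPPA covers children under 13
RETENTION_AFTER_PURPOSE = timedelta(days=30)  # hypothetical grace period


@dataclass
class StudentRecord:
    """Hypothetical record shape used only for this sketch."""
    pseudonym: str
    age: int
    parental_consent: bool
    purpose_ended_on: Optional[date]  # None while the record is still in use


def may_collect(record: StudentRecord) -> bool:
    """Allow collection unless the child is under 13 and consent is missing."""
    if record.age < COPPA_AGE_THRESHOLD:
        return record.parental_consent
    return True


def is_due_for_deletion(record: StudentRecord, today: date) -> bool:
    """True once the educational purpose ended longer ago than the grace period."""
    if record.purpose_ended_on is None:
        return False
    return today - record.purpose_ended_on > RETENTION_AFTER_PURPOSE


def purge(records: List[StudentRecord], today: date) -> List[StudentRecord]:
    """Return only the records that should still be kept."""
    return [r for r in records if not is_due_for_deletion(r, today)]
```

A scheduled job could call `purge` daily so that deletion does not depend on someone remembering to do it. A real deployment would also need verifiable consent records and deletion from backups, which this sketch leaves out.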

Creating a secure learning environment requires more than just choosing compliant software. Schools must develop comprehensive policies that address AI data privacy for students across all touchpoints.

First, conduct a thorough privacy impact assessment before deploying any new AI system. This evaluation should identify what data will be collected, how it will be used, where it will be stored, and who will have access.
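
One way to keep such an assessment honest is to record the four questions (what is collected, how it is used, where it is stored, who has access) in a structured form and refuse sign-off while any of them is unanswered. The sketch below assumes a hypothetical `PrivacyImpactAssessment` class; the field names are illustrative and not taken from any standard template.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PrivacyImpactAssessment:
    """Hypothetical checklist covering the four questions named above."""
    system_name: str
    data_collected: List[str] = field(default_factory=list)
    purposes: List[str] = field(default_factory=list)
    storage_locations: List[str] = field(default_factory=list)
    roles_with_access: List[str] = field(default_factory=list)

    def unanswered(self) -> List[str]:
        """Names of the questions that still have no answer."""
        questions = {
            "data_collected": self.data_collected,
            "purposes": self.purposes,
            "storage_locations": self.storage_locations,
            "roles_with_access": self.roles_with_access,
        }
        return [name for name, answer in questions.items() if not answer]

    def ready_for_review(self) -> bool:
        """True only when every question has at least one entry."""
        return not self.unanswered()


pia = PrivacyImpactAssessment(
    system_name="adaptive tutor (example)",
    data_collected=["quiz scores"],
    purposes=["adaptive practice"],
)
print(pia.unanswered())  # lists the questions still open
```

Keeping the assessment as data rather than a free-text document also makes it easier to re-check whenever a vendor changes what it collects.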

Second, establish data governance committees that include IT security professionals, legal counsel, educators, and parent representatives to provide ongoing oversight.

The stakes for AI data privacy in education have never been higher. Beyond legal compliance, protecting student information is fundamentally about maintaining trust and creating conditions where learning can flourish.

Safety Imperative: Data breaches involving student information can lead to identity theft, cyberbullying, and long-term harm to young people. A single security failure can expose thousands of children to risk, making prevention essential.

Pedagogical Benefits: When students and families trust that their information is secure, they engage more freely with educational technology. This enables deeper learning, intellectual risk-taking, and authentic expression, all of which are critical for academic growth.

Future-Readiness: Today’s students will live in an increasingly data-driven world. By implementing strong AI data privacy practices, schools model responsible technology stewardship and teach students to value their digital rights.

Robust data encryption transforms readable student information into ciphertext that only systems holding the correct key can decrypt. AI platforms for schools should employ AES-256 encryption as a minimum standard, with encryption keys managed separately from the data itself.

Transport Layer Security (TLS) protects data moving between devices and servers, while at-rest encryption safeguards stored information. Some platforms now offer zero-knowledge encryption, where even the service provider cannot access unencrypted student data.
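
As a small illustration of the transport-layer half of this picture, the sketch below builds a client-side TLS configuration with Python's standard `ssl` module. The function name `make_client_tls_context` and the choice of TLS 1.2 as the floor are assumptions made for the example. At-rest encryption and zero-knowledge designs are separate concerns that this sketch does not cover.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Client-side TLS context that verifies certificates and refuses TLS < 1.2."""
    # create_default_context() turns on certificate and hostname verification.
    context = ssl.create_default_context()
    # Reject older protocol versions (assumed floor for this example).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_client_tls_context()
```

The resulting context can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=context)`, so that every request from a school device to the platform is made over a verified, encrypted connection.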

Protecting student anonymity while still enabling personalised learning requires sophisticated technical approaches.

These techniques allow schools to gain valuable insights without compromising individual privacy.
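
Two such techniques can be sketched briefly in Python: pseudonymisation, where a student ID is replaced by a keyed hash, and aggregate reporting that withholds results for very small groups. This is a minimal sketch under assumptions: the function names, the 16-character truncation, and the minimum group size of 5 are hypothetical choices for illustration, and the secret key would need to be stored separately from the data.

```python
import hashlib
import hmac
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

MIN_GROUP_SIZE = 5  # hypothetical threshold below which averages are withheld


def pseudonymise(student_id: str, secret_key: bytes) -> str:
    """Replace a student ID with a keyed hash (HMAC-SHA256), truncated for readability."""
    digest = hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def class_averages(scores: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Average score per class, omitting classes with too few students."""
    by_class: Dict[str, List[float]] = defaultdict(list)
    for class_name, score in scores:
        by_class[class_name].append(score)
    return {
        name: mean(values)
        for name, values in by_class.items()
        if len(values) >= MIN_GROUP_SIZE
    }
```

The same student always maps to the same pseudonym under a given key, so learning history can still be linked for personalisation, while anyone without the key cannot recover the original ID from the pseudonym alone. Suppressing small groups reduces the chance that an "average" effectively reveals one child's score.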

Parents deserve clear, accessible information about how AI systems use their children’s data. Privacy policies should be written in simple language and avoid legal jargon.

Schools should provide mechanisms for parents to:

Educational institutions procure AI systems from vendors—making vendor management critical.

Schools should:

Technology alone cannot protect student data—people and processes matter.

Choosing the right partner for your institution’s digital transformation is a decision that impacts every student and teacher. AI Ready School stands out because we don’t just provide software—we provide a secure, future-ready ecosystem.

We align our frameworks with the Bharat EduAI Stack, ensuring our tools meet the cultural and educational requirements of the Indian school system.

Our technology reduces the administrative burden on educators—giving them back 10+ hours every week to focus on teaching.

We implement the highest levels of student anonymity, encryption, and private cloud protection using a secure “walled garden” architecture.

Our flagship companion is an adaptive AI tutor that grows with every child—providing 24/7 support while maintaining 100% privacy compliance.

The future of education is AI-driven—but it must be safety-first.

Let us show you how we combine cutting-edge technology and rigorous privacy standards to create the ideal learning environment for your students.

Book a Demo with AI Ready School Today